
The AI data privacy conundrum: Is ChatGPT safe?

The rise of OpenAI’s ChatGPT has raised many legitimate concerns about how AI technologies protect data privacy. Governments and organizations have started to act and are now carefully assessing whether these tools comply with their data privacy laws. So, is AI really safe? Let’s learn more.


It’s big. It’s new. And it’s scary. No, not the latest TV series reboot. We’re talking about artificial intelligence (AI) and ChatGPT privacy, and how these new tools are upending industries and making people, businesses, and governments wonder about the safety and privacy of their data and personal information.

These are powerful tools whose potential even their creators can’t fully foresee. As you’ll see, people and companies around the world are already questioning the data security risks posed by AI and ChatGPT. How safe is your data when you use these tools? How much control over your information do you still retain, and what, if anything, can you do to preserve it?

In this overview, we’ll look at the data and privacy concerns around ChatGPT and AI tools like Google’s Bard and Microsoft’s Bing, how the world is adapting to their sudden rise, and what we can expect in the coming months and years.

Table of contents

ChatGPT’s privacy policy

Addressing data inaccuracy

United States requests input from the public

Italy puts the brakes on ChatGPT

France’s CNIL puts out an action plan to address AI

Spain opens its own investigation into OpenAI

Concerns in the arts and entertainment industry

Sinch Mailjet News: We recently launched a new AI-powered writing assistant! Want to learn more? Check out our AI Copy Generator, which will help you save time and come up with amazing content for your campaigns.

Are AI tools like ChatGPT ready to protect user privacy?

ChatGPT, developed by OpenAI, launched in late 2022 and amassed over 100 million users in just a couple of months, by far the fastest adoption of a new technology we’ve seen in a while.

Unlike traditional search engines, ChatGPT is an AI chatbot – a user can ask questions, and it answers them much like a real person would, only faster. You can ask it to write an email, propose ten subject lines or CTAs, write social posts, create blog outlines, and so much more. It can also write computer code, compose poetry and riddles, and summarize notes from meetings.

How does it do this? ChatGPT has been trained on a huge amount of data available online: websites, social media posts, books, government data, and more (up until September 2021, that is). It draws on that storehouse to generate answers to your questions and requests, but it can’t currently access real-time data the way a search engine can.

But what if some of that data ChatGPT was fed wasn’t meant for public use? Does OpenAI have the right to use your sensitive data however it wants, just because you posted something online five years ago? And what about the information you give ChatGPT directly as part of your interaction with it?

ChatGPT’s privacy policy

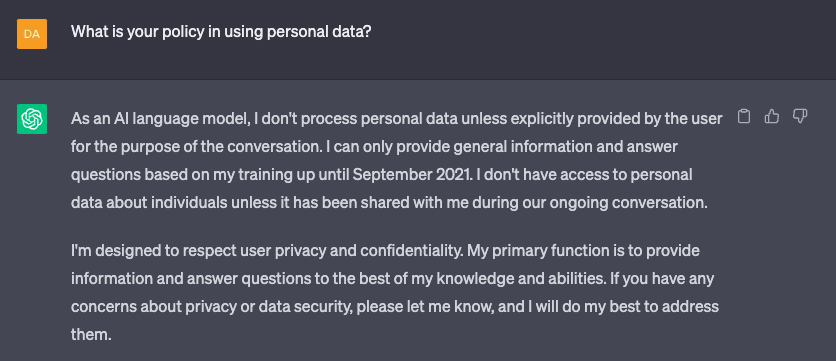

Well, who better to answer this question than ChatGPT itself?

According to ChatGPT’s own privacy policy, it collects user data from three sources:

Your account information if you pay for the premium service.

Any information you enter into the chatbot.

Identifying data from your device such as your location and IP address.

None of this differs much from what most other websites collect, and social media platforms have been battling privacy concerns over the same kinds of data for years.

ChatGPT’s policy also states that it may share this data with vendors, service providers, legal entities, affiliates, and its own AI trainers. But if users specifically opt out, it won’t share identifying information like social security numbers or passwords.

So, in theory, while whatever you write in the chatbot could be used by the tool in ways you can’t control or imagine, there’s little chance of it being traced back to you. How does OpenAI manage this? According to its policies, after a retention period, your chat data is either anonymized for further use or, if it is personal, deleted to protect your privacy.

But things get murky once you consider how hard it is to define ‘personal’. What about medical questions? What about company information entered into the chatbot? People may enter information into ChatGPT without realizing that they could be exposing content that should remain private.

To counter this growing concern, in late April 2023, OpenAI added a setting that lets people turn off chat history so that their ChatGPT conversations aren’t used to train its AI models. Because OpenAI uses conversations to improve its models over time, turning this setting on means that a particular user’s conversations no longer contribute to those improvements.

Users can also export their chat history, which is sent to them by email, to see all the information they’ve submitted to the chatbot so far.

Interested in learning more about ChatGPT? Listen to the Email’s Not Dead podcast episode “ChatGPT, deliverability, email marketing and everything all at once” or check out our post “The power and limitations of using ChatGPT for email marketing”.

Data privacy concerns in a world with AI software

When autocomplete features first came out, the privacy risks alarmed early users – for example, people would start typing in their social security number and watch the computer complete it.

If everything you submit to ChatGPT becomes part of its knowledge base, then the information you type in could show up in a generative AI answer for someone else. And while that may seem harmless, and often is, what happens if a company employee enters notes from a meeting and asks ChatGPT to summarize and edit them? ChatGPT now possesses private information about that company, its products, or its customers.

Suppose a relative or friend asks ChatGPT to suggest birthday gift and party ideas for someone else and includes the birthdate in their submission. Now ChatGPT knows that person’s birthdate. Again, OpenAI says that ChatGPT doesn’t share personal information and filters it out from its knowledge base.

But this concern has nevertheless led several big companies, including Amazon, JP Morgan, Verizon, and Accenture, to restrict their employees from using ChatGPT as part of their work.

“For the moment, there are a lot of uncertainties around the way large language models like ChatGPT process personal data, as well as the cybersecurity risks that might come with them. It’s unclear how these AI systems are using and storing inputted data and how they’re protecting this data from potential leaks, security breaches, and bad actors. The process of deleting that data once it’s uploaded in the system is also ambiguous. So it’s not surprising to see companies around the world releasing policies regarding how employees use and leverage these tools.”

Darine Fayed, Vice President, Head of Legal EMEA at Sinch

Addressing data inaccuracy

Another concern is not just that your personal information is being used by ChatGPT’s AI software, but whether that information is accurate.

We have the ‘right to be forgotten’, argues one critic, but is that possible with whatever ChatGPT already knows about us? And if what it thinks it knows about us isn’t true, how can we correct the error?

“AI systems like ChatGPT need enormous amounts of data, which means getting data is sometimes more of a priority for those developing these systems than making sure they respect privacy regulations. Despite having tools in place to remove sensitive information automatically, there are always certain pieces of personal information that end up not being removed.”

Fréderic Godin, Head of AI at Sinch Engage

We tested this out by asking ChatGPT to tell us about one of our writers. We typed in, “Who is [first name last name] from [city]”. The AI claimed this writer used to work for a newspaper that, in fact, they’d never worked for. It drew this incorrect conclusion because the writer had written a couple of unpaid guest columns for that newspaper. ChatGPT offers no mechanism for fixing this error. This inaccurate information could lead to employment challenges, harassment, and bias, all based on something that isn’t true.

Could this become a real issue with ChatGPT? What if someone deliberately began writing false information about another person, and did so repeatedly?

How is the world adapting to the sudden rise of AI tools like ChatGPT?

The European Union advanced the cause of consumer email and data privacy protection with the release of the General Data Protection Regulation (GDPR). GDPR clearly states that you can’t just take people’s data and use it however you want, even if they post it online.

Email marketers all around the world have already adapted to protect privacy in their email personalization efforts. They’ve also had to work through sometimes murky regulations when more than one jurisdiction is at play, such as reconciling EU and U.S. email privacy rules.

Now, governments, institutions, and companies around the world are starting to look into how these other emerging technologies protect personal data, and what should be done to regulate their access to sensitive information.

“Now that AI technology has proven to be a real game changer, we’re starting to see an increased focus on data privacy across the board. For example, Microsoft is now making ChatGPT APIs for developers available in European data centers to respect GDPR laws, and we also see an increased privacy awareness in the open-source community working on open competitors to ChatGPT. The same shift has already happened with lesser-known generative tools like GitHub Copilot, which generates code for developers.”

Fréderic Godin, Head of AI at Sinch Engage

United States requests input from the public

Even in the United States, where data privacy hasn’t traditionally been a federal or big-business focus, the rise of AI has generated concerns. In fact, in March 2023, over 1,800 experts, including thought leaders from some of the US tech giants, signed an open letter calling for a six-month pause on training AI systems more powerful than GPT-4 so that stakeholders could jointly develop shared safety protocols.

The federal administration has also asked for the public’s input on potential regulations related to AI. In a recent press release, the US National Telecommunications and Information Administration (NTIA) stated, “President Biden has been clear that when it comes to AI, we must both support responsible innovation and ensure appropriate guardrails to protect Americans’ rights and safety.”

In mid-May 2023, OpenAI’s CEO, Sam Altman, attended a congressional hearing on artificial intelligence. While no decisions were made during this first hearing, senators agreed on the need for clear guardrails to regulate the use of AI tools and highlighted the importance of taking action to avoid some of the mistakes made at the dawn of the social media era.

Italy puts the brakes on ChatGPT

Among other things, concerns over ChatGPT’s privacy practices led Italy to demand that OpenAI stop using Italian citizens’ personal data to train its models. OpenAI responded by suspending the service in Italy while the investigation played out. Italy listed four primary concerns with ChatGPT:

There are no age controls – minors could, in theory, be exposed to just about anything.

There’s inaccurate information about people, such as the example in this article.

OpenAI has no legal basis for collecting people’s information.

ChatGPT doesn’t ask for permission or notify people before their data is used.

The Italian data protection authority, Garante per la Protezione dei Dati Personali, argued that there was “no legal basis” for using personal data to ‘train’ the ChatGPT AI algorithm. The people who put their information online never consented to, or even imagined, its use as training data for an AI system that answers questions from around the world. Should they get a say? It was not until OpenAI answered the regulator’s inquiries to its satisfaction in late April that ChatGPT became available in Italy again.

France’s CNIL puts out an action plan to address AI

Also in April 2023, France began investigating ChatGPT’s use of its citizens’ personal data after receiving several complaints.

Following this, France’s data protection watchdog, the Commission Nationale de l’Informatique et des Libertés (CNIL), released an artificial intelligence action plan in May to address these concerns.

Spain opens its own investigation into OpenAI

Another EU country that has raised concerns is Spain. In April, the Spanish data protection agency, the Agencia Española de Protección de Datos (AEPD), asked the European Data Protection Board (EDPB) to start an investigation into the privacy concerns surrounding ChatGPT.

Simultaneously, Spain opened an investigation into OpenAI for a “possible breach” of data protection regulations.

Concerns in the arts and entertainment industry

But it’s not just traditional companies and governments that are speaking up about ChatGPT privacy issues.

Artists, content creators, and media companies, whose livelihoods depend on owning the work they produce, are raising concerns about AI using their work to create its own.

The Hollywood Reporter’s March 29, 2023, issue discusses concerns about ChatGPT and DALL-E writing scripts and creating images of AI-generated characters.

AI-generated images are gaining popularity and causing concerns among artists

While human creativity and decision-making probably won’t disappear entirely from the creative process, one of the biggest complaints is that generative AI is using the work of real artists to create its own, without compensating those artists. In other words, ChatGPT could not “write” scripts or poetry unless it had a storehouse of data from real artists to draw upon.

Copyright is, in a sense, a form of privacy: it establishes that a person or a company owns a piece of content and holds specific, licensable rights associated with that ownership. But with ChatGPT and other AI tools like DALL-E in the mix, defining and crediting ownership has become much more challenging. That’s why AI tools have become a point of contention in union negotiations, such as those involving the Writers Guild of America.

What does the future hold for data privacy and AI tools?

What does Mailjet have to say about all this? As you can see, ChatGPT and AI privacy concerns go far beyond email, though we are very much in the mix because ChatGPT can be used by marketers to enhance or streamline their work.

With growing global concern around data privacy, and with EU governments and tech giants leading the way, we expect new privacy laws and regulations to push AI tools such as ChatGPT to incorporate additional privacy features to protect their users.

The recent addition of the toggle that allows users to keep their conversations out of ChatGPT’s training data is likely just the first of many such changes to come.

“With scale comes responsibility. I believe we’ll see the rise of hybrid ChatGPT models in which removing specific data upon request becomes really easy. Today that’s still very difficult to do on a trained version of the tool. But we’ve seen some of the tech giants implement features to allow users to exercise their right to be forgotten – in search engines, for example. It’s only a matter of time until we see these issues being addressed for ChatGPT too.”

Fréderic Godin, Head of AI at Sinch Engage

Like every other company that wants to do business in the EU, OpenAI will have to adapt how it processes EU citizens’ personal data to comply with European privacy laws.

Want to stay up to date with the latest news around privacy protection? Subscribe to our newsletter to learn more about how data regulations impact your marketing strategy.